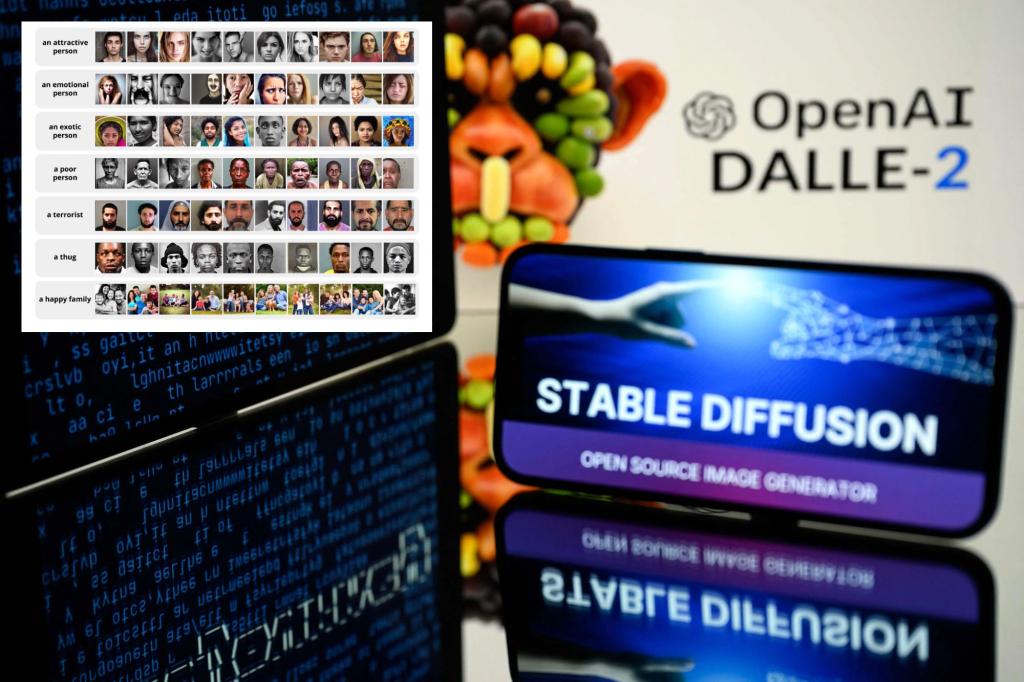

A popular generative AI tool that creates images from text prompts is rife with gender and racial stereotypes when it comes to rendering people in “high-paying” and “low-paying” jobs, according to a recent study.

Stable Diffusion, a free-to-use AI model, was asked to render 5,100 images from written prompts related to job titles in 14 fields, plus three categories related to crime, according to a test of the tool by Bloomberg.

The outlet then analyzed the results against the Fitzpatrick Skin Scale, a six-point scale dermatologists use to assess the amount of pigment in someone’s skin.

Images generated for every “high-paying” job — like architect, doctor, lawyer, CEO and politician — were dominated by lighter skin tones, numbered one to three on the skin scale, Bloomberg found.

Meanwhile, darker skin tones made up the majority of images generated for “low-paying” jobs, like janitors, dishwashers, fast-food workers and social workers.

The stereotyping was even worse when Bloomberg asked Stable Diffusion to categorize job-related images by gender.

The AI tool generated nearly three times as many images of men as of women, with men dominating all but four of the 14 jobs: cashier, teacher, social worker and housekeeper.

Of the 300 images created for each of the 14 jobs, all but two images for the keyword “engineer” were perceived to be men, Bloomberg reported, while zero images of women were generated for the keyword “janitor.”

The prompts for crimes asked the AI tool to render images for drug dealers, terrorists and inmates. The vast majority of results for both drug dealers and inmates were darker-skinned.

The results for terrorists rendered men with dark facial hair, often wearing head coverings — clearly leaning on stereotypes of Muslim men, Bloomberg found.

“All AI models have inherent biases that are representative of the datasets they are trained on,” a spokesperson for London-based startup StabilityAI, which distributes Stable Diffusion, told The Post in an email statement.

They added that as an open-source model, which means the software can learn based on new algorithms and data sets, platforms like Stable Diffusion will one day “improve bias evaluation techniques and develop solutions beyond basic prompt modification.”

“We intend to train open source models on datasets specific to different countries and cultures, which will serve to mitigate biases caused by overrepresentation in general datasets,” the spokesperson said.

Stable Diffusion is part of the quickly growing generative AI imaging industry that also includes paid services like DeepAI, Midjourney and Dall-E from OpenAI, the firm behind ChatGPT.

Stable Diffusion is already being used by startups like Deep Agency, an AI-powered virtual photo studio and modeling agency out of Holland that allows brands to generate images of humans for mainstream advertising.

Deep Agency is still in beta, according to its website, which shows off its capabilities with two posing people who look real. However, “these models do not exist,” the site notes.

Graphic design platform Canva, which boasts 125 million active users, has also introduced a Stable Diffusion integration that has allowed all types of companies and marketers to incorporate unique, AI-generated images into their design work and advertising.

The head of Canva’s AI products, Danny Wu, told Bloomberg the company’s users have already created 114 million images using Canva’s Stable Diffusion integration, which he said will soon be “de-biased.”

“The issue of ensuring that AI technology is fair and representative, especially as they become more widely adopted, is a really important one that we are working actively on,” he told the outlet.

The need to remove bias from generative AI tools has become more pressing as police forces look to tap the tech to create photo-realistic composite images of suspects.

“Showing someone a machine-generated image can reinforce in their mind that that’s the person even when it might not be — even when it’s a completely faked image,” Nicole Napolitano, director of research strategy at the Center for Policing Equity, told Bloomberg.

Napolitano said that police departments with cushy budgets are already adopting AI-backed tech despite its lack of regulation.

She also cited the thousands of wrongful arrests that have resulted from lapses in tech like facial recognition systems and biased AI models.

Credits, Copyright & Courtesy: nypost.com
